Ethical AI (Part II: Systematicity)

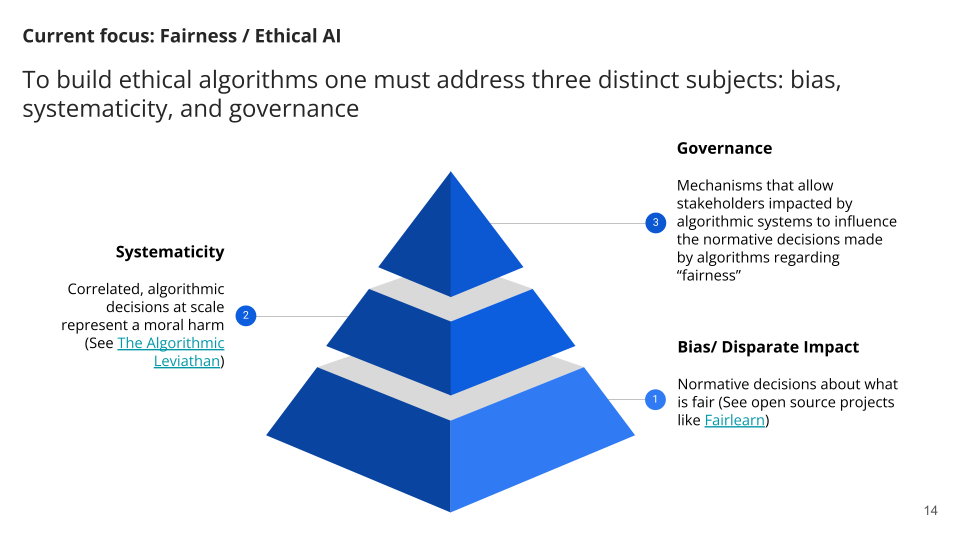

This is the second of three articles in a series covering our approach to building an Ethical AI Framework. AdeptID’s approach to Ethical AI (which we sometimes call “the Pyramid”) has three components:

- Mitigating Bias
- Addressing Systematicity
- Improving Governance

This second post approaches the topic of addressing systematicity.

Background

In February, we shared that, at their core, algorithms are descriptions of step-by-step actions that need to happen in order to achieve a specific outcome. While algorithms have become increasingly sophisticated, users and practitioners alike are finding it harder to break down how they work in application.

Research and writing on Ethical AI has definitely expanded our team’s vocabulary. Thanks to our advisor Dr. Kathleen Creel, we’ve been using a new term, Systematicity, quite a bit.

Systematicity is not a Police song, but a philosophical concept regarding the correlation of errors. To talk about Systematicity, we should first discuss arbitrary algorithmic decision-making. Much of this thinking comes from Dr. Creel’s work at Stanford on the Algorithmic Leviathan.

Arbitrariness

Let’s start with three understandings of arbitrariness, which in our case describes a decision that is:

- Unpredictable
- Unconstrained by rules or standards
- Unreasonable or nonsensical

We believe that models whose decisions meet the above criteria cause moral harm when they are applied to individuals’ futures.

Now, let’s give some talent-related (and very familiar) examples for each of these understandings.

- Unpredictable:

So many hiring decisions cannot be predicted based on the available information. We tend to assume that the person hired for a job is someone who meets the criteria of the job description, but I think we all agree that this is rarely true (this is why we’re so wary of analysis based purely on job postings). These unpredictable decisions, made by people “in the real world”, are part of what trains our algorithms, which, if left unmonitored, end up taking on the same unpredictability (and arbitrariness).

- Unconstrained by rules or standards:

Another characteristic of an arbitrary decision is that it is untethered from any rules, standards, or compliance requirements.

Of course, algorithms themselves follow the rules we’ve given them (though we don’t always know the implications of those rules). Nevertheless, so many job searches, career progressions, and hiring decisions do not follow any rules whatsoever. That serendipity can be positive, but it is opaque.

The more that a decision-making process can be made transparent – by sharing the thought process and rationale – the more it can be made legible to models.

Irrespective of implications for algorithms, incorporating rules and standards into hiring processes is also one of the most reliable ways to make them less biased.

- Unreasonable or nonsensical:

Lastly, arbitrary can be defined as “unreasonable” or “nonsensical”. This is the weird sibling of the above. There are so many things that happen to people’s careers that are byproducts of being “right place, right time” or the reverse. Covid-19 presented an unpredictable event for many careers, but the cascading impacts of the pandemic, its geo-political aftershocks, and the reactions of each living person have been so chaotic that “unpredictable” doesn’t quite capture the effect on individual careers.

Nonsensical forces impact any career. That isn’t a judgment as much as an observation – but it does have implications if those nonsensical impacts are correlated, rather than purely random.

Arbitrariness, as defined above, shows up as errors in our models. While it sounds bad, the presence of errors in any statistical exercise is inevitable (and amoral). However, we need to test our models to ensure that these errors are not correlated – that there is no Systematicity.
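One way to make “errors are not correlated” concrete is to compare where two models go wrong on the same people. The sketch below is illustrative only: the function name `error_correlation` and the toy error vectors are assumptions, not a description of AdeptID’s actual tests. It computes the Pearson correlation between two 0/1 error indicators (the phi coefficient). A value near 1 suggests that both models fail the same individuals, which is the pattern to watch for.

```python
from math import sqrt

def error_correlation(errors_a, errors_b):
    """Pearson correlation between two 0/1 error indicator lists (phi coefficient)."""
    if len(errors_a) != len(errors_b):
        raise ValueError("error lists must cover the same individuals")
    n = len(errors_a)
    mean_a = sum(errors_a) / n
    mean_b = sum(errors_b) / n
    cov = sum((a - mean_a) * (b - mean_b) for a, b in zip(errors_a, errors_b))
    var_a = sum((a - mean_a) ** 2 for a in errors_a)
    var_b = sum((b - mean_b) ** 2 for b in errors_b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a model with no errors (or only errors) carries no signal here
    return cov / sqrt(var_a * var_b)

# Hypothetical data: 1 means the model misclassified that person, 0 means it was right.
model_a_errors = [1, 1, 0, 0, 0, 0, 0, 0]
same_mistakes = [1, 1, 0, 0, 0, 0, 0, 0]   # fails exactly the same two people
other_mistakes = [0, 0, 0, 0, 0, 0, 1, 1]  # fails two different people

systematic = error_correlation(model_a_errors, same_mistakes)   # perfectly correlated errors
spread_out = error_correlation(model_a_errors, other_mistakes)  # negative: errors do not overlap
```

In practice the same comparison could be run between a model and historical human decisions, or between model versions, to see whether the same individuals keep ending up on the wrong side.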

Systematicity and Overfitting

When we say that linguistic capacities are systematic, we are talking about the fact that an ability to produce and/or understand some sentences is intrinsically connected to an ability to produce and/or understand certain others.

Arbitrary algorithmic decisions can wrong individuals. But arbitrariness alone isn’t necessarily a moral concern, except when special circumstances apply – particularly, when a person is excluded from a broad range of opportunities based on arbitrary algorithmic decisions.

Arbitrariness at scale stems from the Goldilocks challenge facing machine learning: the difficulty of ensuring that a model learns neither too little nor too much.

The model that learns too much “overfits.” By memorizing the training data rather than learning generalizable patterns, it learns patterns based on the noise in the training data that fail to generalize beyond the initial context. The model that learns too little “underfits”: it misses generalizable patterns present in its training data.
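A toy illustration of the difference, using made-up data: the dataset, the “hired if three or more years of experience” pattern, and the three model functions below are all assumptions chosen for clarity. The memorizer copies the nearest training label (noise included), the threshold rule captures the general pattern, and the constant model ignores its input.

```python
def accuracy(model, data):
    """Share of (x, label) pairs the model predicts correctly."""
    return sum(model(x) == label for x, label in data) / len(data)

# Hypothetical training data: (years of experience, hired?). The (5, 0) row is noise.
train = [(1, 0), (2, 0), (3, 1), (4, 1), (5, 0), (6, 1), (7, 1)]
# Hypothetical unseen applicants that follow the underlying pattern (hired if >= 3 years).
test = [(1.4, 0), (2.4, 0), (3.6, 1), (4.8, 1), (5.2, 1), (6.6, 1)]

def memorizer(x):
    """Overfits: copies the label of the closest training example, noise included."""
    _, nearest_label = min(train, key=lambda row: abs(row[0] - x))
    return nearest_label

def threshold_rule(x):
    """Generalizes: a single cut-off that ignores the noisy row."""
    return 1 if x >= 3 else 0

def always_reject(x):
    """Underfits: ignores the input entirely."""
    return 0

for name, model in [("memorizer", memorizer),
                    ("threshold", threshold_rule),
                    ("always_reject", always_reject)]:
    print(f"{name}: train={accuracy(model, train):.2f} test={accuracy(model, test):.2f}")
```

The memorizer is perfect on the training data but rejects the two unseen applicants who sit closest to the noisy row, while the simpler rule gets every unseen applicant right.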

Automated decision-making systems that overfit by learning an accidental pattern in the dataset will produce a systematic disadvantage to some number of people. This happens when the classifier identifies a consistent set of features that it can systematically enforce to exclude a particular grouping of people, without generalizing beyond the training data.

As we pointed out in our article on mitigating bias, arguably the largest cause for worry is that people affected by systemic arbitrariness aren’t typically a random group of people, but might be members of disadvantaged groups (protected by antidiscrimination law). So what may appear as an isolated or irrational incident at an individual level may actually indicate disparate impact discrimination at scale.

Possible Solutions to Systematicity

There’s a lot of discussion around how the machine learning community can address systematicity at scale, but few concrete solutions.

In an ideal state, we could address the arbitrariness by finding all individuals who have been mis-classified and correcting the error of their classification, or by finding all arbitrary features and removing them. But if it were possible to algorithmically identify the individuals who had been mis-classified, they wouldn’t have been mis-classified in the first place.

There’s also an industry suggestion to address the algorithmic error by adding a “human in the loop” who checks results by using a separate method to evaluate a randomly selected subset of the classifications. But our team isn’t convinced this is an ethical alternative, as adding an extra method, orchestrated by human judgment, reintroduces the potential for bias.

One sound proposal for reducing bias in contemporary algorithmic decision-making systems is to “audit” the systems by running them through a series of tests that check for plausibility, robustness, and fairness. Even so, it might be difficult for an auditor, or even an affected person, to prove that a complex nexus of intersecting identities caused an algorithmic decision-making system to uniformly misclassify her.
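As a rough idea of what one fairness check in such an audit could look like, the sketch below compares false negative rates across groups. Everything here is an assumption for illustration: the function name, the record layout, and the placeholder group labels “A” and “B”. It is one of many possible fairness measures, not a complete audit.

```python
from collections import defaultdict

def false_negative_rate_by_group(records):
    """records: iterable of (group, qualified, accepted) tuples with boolean flags.

    Returns {group: share of qualified people in that group who were rejected}.
    """
    qualified = defaultdict(int)
    rejected = defaultdict(int)
    for group, is_qualified, is_accepted in records:
        if is_qualified:
            qualified[group] += 1
            if not is_accepted:
                rejected[group] += 1
    return {g: rejected[g] / qualified[g] for g in qualified}

# Hypothetical audit sample; the group labels are placeholders.
sample = [
    ("A", True, True), ("A", True, True), ("A", True, True), ("A", True, False),
    ("B", True, True), ("B", True, False), ("B", True, False), ("B", True, False),
    ("A", False, False), ("B", False, False),
]

rates = false_negative_rate_by_group(sample)
gap = max(rates.values()) - min(rates.values())  # a large gap flags the system for review
```

A check like this only covers the groups the auditor thinks to define, which is part of why intersecting identities are hard to audit for.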

Randomization as Solution

Predictive models that are wrong in a consistent way are actually not random or coincidental. What if we were to introduce randomization?

Imagine a pool of applicants for a job consisting of 1000 people, of whom 10 are the most qualified applicants and the rest are unqualified.

Let’s say the most qualified applicants are Angel, Bob, Carmen, Davante, Erin, and so on through Indira and Juan.

The most successful classifier might recommend the first nine most qualified applicants, Angel through Indira, but reject the tenth, Juan, leaving it with a 99.9% unweighted success rate (999 of 1,000 applicants classified correctly).

An equally successful classifier might accept Bob through Juan but reject Angel, leaving it with the same 99.9% unweighted success rate.

A third classifier, only slightly less successful than the other two, might reject Bob and Carmen but accept the other eight, leaving it with a 99.8% success rate.

Therefore, the differences between the most successful classifiers might be differences in success on individuals rather than differences in optimality, as in this case where classifiers have similar performance, but different false negatives.
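The arithmetic behind this example can be worked through directly. In the sketch below, “unweighted success rate” is taken to mean plain accuracy over the whole pool (an assumption), and the three “Applicant F/G/H” names are placeholders because the example does not name every qualified applicant.

```python
qualified = ["Angel", "Bob", "Carmen", "Davante", "Erin",
             "Applicant F", "Applicant G", "Applicant H",  # placeholders, not named in the text
             "Indira", "Juan"]
unqualified = [f"Unqualified {i}" for i in range(990)]
pool = qualified + unqualified
qualified_set = set(qualified)

def success_rate(accepted):
    """Unweighted accuracy: share of the 1,000-person pool classified correctly."""
    accepted = set(accepted)
    correct = sum((person in accepted) == (person in qualified_set) for person in pool)
    return correct / len(pool)

classifier_1 = [p for p in qualified if p != "Juan"]               # rejects Juan
classifier_2 = [p for p in qualified if p != "Angel"]              # rejects Angel
classifier_3 = [p for p in qualified if p not in ("Bob", "Carmen")]  # rejects Bob and Carmen

classifiers = (classifier_1, classifier_2, classifier_3)
rates = [success_rate(c) for c in classifiers]
false_negatives = [sorted(qualified_set - set(c)) for c in classifiers]

for rate, missed in zip(rates, false_negatives):
    print(f"success rate {rate:.1%}, wrongly rejected: {missed}")
```

One mistake in a pool of 1,000 gives 999/1000, and two mistakes give 998/1000, so the three classifiers are nearly indistinguishable on aggregate performance while wronging entirely different people.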

At AdeptID, we believe the case for randomization is even stronger in contexts where the algorithm relies on several arbitrary features and doesn’t achieve its aims.

With the same use case as the applicant pool example above, let’s imagine a screening tool that identifies only two or three qualified applicants among the ten it puts forward. The employer will surely be motivated to improve its screening tool to achieve its ends. At any given time, the employer is likely to leverage a tool that they believe is good. If correct in their belief, introducing randomness helps distribute opportunity without any loss to the employer, given multiplicity. And if mistaken, the employer will understand that introducing randomness still distributes that burden in a way that prevents systemic exclusion.
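To see how randomization spreads opportunity, consider drawing one of several comparably good screening tools at random for each hiring round. This is a minimal sketch of one possible mechanism, not a specification: the tool names, the shortlists, and both helper functions are hypothetical.

```python
import random

# Hypothetical shortlists from three comparably accurate screening tools.
shortlists = {
    "tool_1": {"Angel", "Bob", "Carmen"},
    "tool_2": {"Bob", "Carmen", "Juan"},
    "tool_3": {"Angel", "Carmen", "Juan"},
}

def acceptance_probability(person, shortlists):
    """Chance of being shortlisted when one tool is drawn uniformly at random."""
    hits = sum(person in accepted for accepted in shortlists.values())
    return hits / len(shortlists)

def screen_with_random_tool(shortlists, rng):
    """Draw one tool at random for this hiring round and return its shortlist."""
    tool = rng.choice(sorted(shortlists))
    return tool, shortlists[tool]

rng = random.Random(0)  # seeded only so the sketch is reproducible
tool, accepted = screen_with_random_tool(shortlists, rng)

# If every employer used tool_1, Juan would be excluded every time.
juan_fixed = 1.0 if "Juan" in shortlists["tool_1"] else 0.0
# With a random draw, his chance is the share of tools that shortlist him (2 of 3).
juan_randomized = acceptance_probability("Juan", shortlists)
```

If the tools really are equally good, the employer loses nothing by the draw, and no single applicant is shut out of every round by the quirks of one model.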

The advent of AI has been accompanied by a growing awareness of digital technology’s potential to cause harm, whether through discrimination or violations of privacy. Policymakers are still debating how to address these harms but, in the absence of laws, some institutions have taken an ethics approach, defining principles of ethical behavior and committing to uphold them – with varying degrees of credibility.

Next month, we’ll close out our Ethical AI series by discussing how our framework calls for, and will aim to implement, ethical, democratic governance.

AdeptID’s three-tiered approach

Over the past several months, the team at AdeptID has built a “Pyramid” of what will be our Ethical AI Framework.

- At a base level, we need to address Algorithmic Bias.
- Once we’ve accomplished this, we move on to our second phase, which is Addressing Systematicity, or correlated errors.
- Lastly, this framework needs to be responsibly governed, and requires a democratic Governance Model.

Let’s keep talking

AI, machine learning, algorithms… whatever we call them, they have tremendous potential to improve talent outcomes at scale provided they are applied responsibly. We’re excited to have this conversation with stakeholders (talent, employers, training providers) and fellow practitioners alike.

For press inquiries, please contact [email protected].