Ethical AI at AdeptID (Part I: Mitigating Bias)

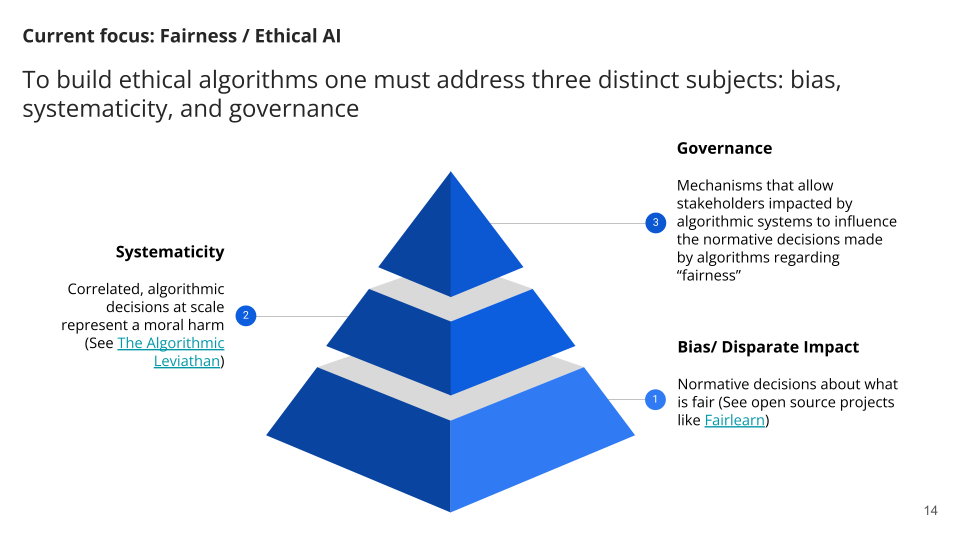

This is the first of three articles in a series covering our approach to building an Ethical AI Framework. AdeptID’s approach to Ethical AI (which we sometimes call “the Pyramid”) has three components:

- Mitigating Bias
- Addressing Systematicity
- Improving Governance

This first post addresses mitigating bias.

Algorithms all around

The world we live in is governed by algorithms and designed in code. This is not the start of a science fiction novel; this is February 2022.

Nearly 90%* of the global population relies on algorithms daily, for tasks ranging in complexity from simply using a phone to order a pizza to passively executing trades in the stock market. Yet the concepts of coding and applying algorithms remain mysterious to most who make use of them.

Until recently, algorithms were the exclusive domain of computer scientists. Now they are pervasive, and they play an integral role in achieving successful outcomes in countless business models and policies, across sectors and demographics.

Some reports go so far as to claim that we live in the Age of the Algorithm. But algorithms are not that new. Algorithms of some form have been applied, and have evolved, over centuries, and are one of the most common instruments of knowledge sharing.

As algorithms have become increasingly sophisticated, we’ve often found it harder to break down how they work in application. At their core, algorithms are simply descriptions of step-by-step actions that need to happen in order to achieve a specific outcome.

Algorithms for Talent

The labor market is increasingly turning to algorithms and machine learning to fill hundreds of thousands of open jobs, with the hope of bringing greater diversity into the workforce at large, and broadening our labor economics. But many employers shy away from adopting such AI software, and we can understand why.

When it’s misapplied, AI for recruiting and hiring can easily result in discrimination against individuals and damage to companies’ reputations. That’s because AI tech runs on algorithms that are informed by large data sets and that use this data to evolve and develop. When models are trained on biased data, they produce biased outcomes.

In 2014, Amazon began building an experimental hiring tool designed to sort and refine applicants into a polished list of potential candidates. The AI was trained on existing recruitment data, drawing on patterns in resumes submitted to Amazon job postings over the course of ten years. Amazon’s models were informed by previously successful candidates, who were predominantly men, meaning that this experimental hiring tool was trained to favor male candidates. The hiring tool promoted a less diverse talent pool, penalizing key phrases that differentiated women in lists of resumes (e.g. “softball” as an extracurricular activity).

The dangers of hiding protected classes

To maneuver around the adoption of AI tech in recruitment, companies have incorporated manual methods to eliminate bias, such as striking out sensitive information on resumes (age, gender, race, education). When these results are then uploaded and built into alternative models, they no longer include protected classes of data.

This sounds like a responsible approach, and an easy way to implement diversity protocols, but even if companies hide these protected classes from their datasets, algorithms still rely on other categories of data – say, zip codes, or “softball” – which have their own biases. Counterintuitively, these efforts often result in a recruiting process more biased than where it began.
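To illustrate why hiding a protected column does not remove its signal, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the toy rows, the field names `zip_code` and `group`, and the helper functions are hypothetical and are not AdeptID data or code. It measures how often the protected attribute can be guessed from the proxy alone, compared with guessing the overall majority group.

```python
from collections import Counter, defaultdict


def proxy_strength(rows, proxy_key, protected_key):
    """Share of rows whose protected value is guessed correctly when we
    predict the most common protected value for each proxy value.

    `rows` must be a list of dicts (hypothetical toy records).
    """
    by_proxy = defaultdict(Counter)
    for row in rows:
        by_proxy[row[proxy_key]][row[protected_key]] += 1
    correct = sum(counts.most_common(1)[0][1] for counts in by_proxy.values())
    return correct / len(rows)


def majority_baseline(rows, protected_key):
    """Share of rows guessed correctly by always predicting the overall
    most common protected value, ignoring the proxy entirely."""
    counts = Counter(row[protected_key] for row in rows)
    return counts.most_common(1)[0][1] / len(rows)


# Hypothetical toy data: the protected column "group" would be hidden from
# a model, but "zip_code" stays in the dataset.
rows = (
    [{"zip_code": "zip_1", "group": "A"}] * 8
    + [{"zip_code": "zip_1", "group": "B"}] * 2
    + [{"zip_code": "zip_2", "group": "A"}] * 1
    + [{"zip_code": "zip_2", "group": "B"}] * 9
)

strength = proxy_strength(rows, "zip_code", "group")
baseline = majority_baseline(rows, "group")
print(f"guess from zip code: {strength:.2f}, guess without it: {baseline:.2f}")
```

In this toy data, the zip code recovers the hidden group far more often than the baseline guess does, which is the sense in which a remaining column can carry the bias of a removed one. Real datasets would call for a proper statistical analysis rather than this simple count.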

Making bias legible

We recognize the risks of relying on machine learning in the hiring process, and the potential for bias in applying algorithms to identify talent. However, we believe that the real risk is neglecting data that would make these biases legible. For precisely this reason, step one in our modeling approach is to make biases legible, so that we can then mitigate them. We’re confident that machine learning can be applied responsibly to the labor market because of the work that we and others are doing to mitigate the risk of bias.
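One common way to make bias legible is to compare selection rates across groups. The Python sketch below is an illustration under stated assumptions, not a description of AdeptID’s modeling pipeline: the function names, group labels, and records are hypothetical. It computes each group’s selection rate and the ratio of the lowest rate to the highest. A frequently cited rule of thumb in US employment contexts, the “four-fifths rule”, treats a ratio below 0.8 as a signal worth investigating.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive outcomes per group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the (hypothetical) model recommended the candidate.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap
    return min(rates.values()) / highest


# Hypothetical toy data: (group label, model recommended?)
records = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

rates = selection_rates(records)
ratio = impact_ratio(rates)
flagged = ratio < 0.8  # four-fifths rule of thumb, used here as an assumption
print(rates, round(ratio, 2), flagged)
```

Note that this check is only possible when the group labels are available for evaluation, which is why discarding that data makes bias harder to see rather than easier to avoid.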

AdeptID’s three-tiered approach

Over the past several months, the team at AdeptID has built a “Pyramid” of what will be our Ethical AI Framework.

- At a base level, we need to address Algorithmic Bias.
- Once we’ve accomplished this, we move on to our second phase, which is Addressing Systematicity, or correlated errors.
- Lastly, this framework needs to be responsibly governed, and requires a democratic Governance Model.

Let’s keep talking

AI, machine learning, algorithms… whatever we call them, they have tremendous potential to improve talent outcomes at scale provided they are applied responsibly. We’re excited to have this conversation with stakeholders (talent, employers, training providers) and fellow practitioners alike.

For press inquiries, please contact [email protected].